Whether you are working on ADAS and about to move up to more advanced autonomous functions, or working on the long tail of L4 autonomy, one thing is for sure: you are about to face exponential growth in the number of scenarios you need to test in order to get your autonomous product out. In a recent session on Reddit, Drago Anguelov of Waymo described the “long tail” the industry has encountered in going from effective Autonomous Vehicle prototypes to working products. Despite the many announcements of working products expected in the near future, it’s clear that the AV industry isn’t close to where it should be. The industry is starting to sober up to the fact that releasing working products requires a dramatic increase in the number of scenarios tested.

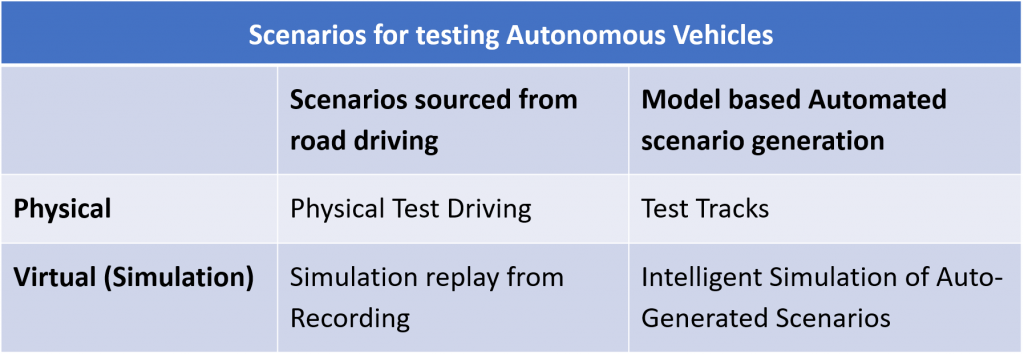

One of the major weaknesses of the existing mainstream approach to AV development is its over-reliance on physical road driving and its recordings. Physical testing is obviously needed, but is it enough? Should it be the primary focus? In these hectic times, the Autonomous Vehicle and ADAS industry has an opportunity for a paradigm shift. With most autonomous vehicle test driving stalled due to Covid-19, it is time to take a step back and see how verification programs can be modified to make a leap forward in safety, efficiency and cost. See, for example, Voyage CEO Oliver Cameron.

After a long career in Verification of complex systems, I can state unequivocally that subscribing almost exclusively to a single verification method is both inefficient and counterproductive. Anyone who has worked in the fields of Verification and Validation can tell you that each set of tools has its own combination of advantages and blind spots. The goal of any verification program is to find the right combination of tools and methods to overcome the inherent gaps and utilize the advantages of each.

Physical and recorded driving are essential components of testing autonomous vehicles. While these approaches have many benefits, they unfortunately have inherent gaps. To fill these gaps, I contend that simulation of model-based generated scenarios is required. In this article, I will discuss some of the gaps in physical driving, the key advantages of generated scenarios, and why I believe that relying solely on physical recordings is the wrong approach.

Gaps in physical & recorded driving

- There are only a limited number of opportunities and ways to test dangerous scenarios

- In physical and recorded tests, it is difficult to create new scenarios by manipulating factors beyond weather, lighting conditions and limited changes to additional actors

- In recordings, actors cannot respond to changes in the EGO’s behavior

- Prohibitively high cost of physical driving & recordings

- Rare events may not really be as rare as projected

- Some errors, however rare they may be, are inexcusable and need to be tested

Before delving deeper into the advantages of model-based automated scenario generation, let’s define the term as it applies to testing autonomous vehicles. By model-based automated scenario generation, I mean Coverage Driven Constrained Random Verification: the ability to capture a scenario in a high-level language and let a random generator choose places on a map, generate multiple scenario parameters and create a massive number of valid scenario variations within the constraints provided. The model can both randomize abstract scenarios and mix them with other scenarios. In addition, it can track simulations to check that they met all the KPIs and covered all the scenarios required to prove the safety case.
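To make the definition concrete, here is a minimal Python sketch of a coverage-driven constrained random loop, assuming a made-up cut-in scenario. The `CutIn` fields, road names, the urban speed constraint and the coverage bins are all hypothetical; a real flow would describe the scenario in a scenario language and simulate each variant rather than only recording which coverage bin it hit.

```python
import random
from dataclasses import dataclass

# Hypothetical abstract scenario: a vehicle cutting in ahead of the EGO.
@dataclass
class CutIn:
    road: str          # where on the map the scenario is placed
    ego_speed: float   # km/h
    cut_in_gap: float  # metres between the EGO and the cutting-in vehicle
    side: str          # "left" or "right"

ROADS = ["highway_a", "highway_b", "urban_ring"]  # illustrative map locations

def valid(s: CutIn) -> bool:
    # Example constraint (assumption): urban roads are limited to 60 km/h.
    return not (s.road == "urban_ring" and s.ego_speed > 60)

def generate(rng: random.Random) -> CutIn:
    # Constrained random generation by rejection sampling: draw, keep only valid variants.
    while True:
        s = CutIn(road=rng.choice(ROADS),
                  ego_speed=rng.uniform(20, 130),
                  cut_in_gap=rng.uniform(5, 60),
                  side=rng.choice(["left", "right"]))
        if valid(s):
            return s

def coverage_bin(s: CutIn) -> tuple:
    # Cross-coverage: speed bucket x gap bucket x side.
    speed = "low" if s.ego_speed < 50 else "mid" if s.ego_speed < 90 else "high"
    gap = "close" if s.cut_in_gap < 15 else "far"
    return (speed, gap, s.side)

ALL_BINS = {(sp, g, sd) for sp in ("low", "mid", "high")
            for g in ("close", "far") for sd in ("left", "right")}

def run_until_covered(seed: int, max_runs: int = 10_000):
    """Generate variants until every coverage bin has been hit (or max_runs is reached)."""
    rng = random.Random(seed)
    hit = {}
    runs = 0
    while set(hit) != ALL_BINS and runs < max_runs:
        s = generate(rng)
        # A real flow would simulate s here and check KPIs; this sketch only records coverage.
        b = coverage_bin(s)
        hit[b] = hit.get(b, 0) + 1
        runs += 1
    return runs, hit
```

Calling `run_until_covered(seed=1)` returns how many variants were needed to fill all twelve bins; the coverage goal, not a fixed test count, decides when enough has been run.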

Exploring the relative advantages of Automated Virtual Testing

Controllable and Safe

Simulation is completely controllable and safe. In real physical driving, there are limited ways to test dangerous scenarios. In contrast, model-based scenario generation makes it possible to take an abstract scenario and generate thousands to millions of valid variations. For example, a pedestrian crossing a highway is rare in physical driving, so the number of instances of this scenario recorded by chance during road driving is limited. On a test track, this scenario will have very few variations, if any.

In the model-based approach, this simple scenario can be varied for multiple parameters (height, clothing, distance, speed, direction, weather, occluded areas…) and can appear in different parts of the map. It can also be mixed with other scenarios. Obviously, this critical scenario, however rare it may be, is best tested comprehensively — which is only possible using model-based scenario generation.
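A quick Python calculation shows how fast the variation space grows. The buckets below for the parameters named above are illustrative assumptions, not a recommended test plan: even coarse discretization of a single pedestrian-crossing scenario yields thousands of combinations per map location.

```python
import math
import random

# Illustrative discretization of the pedestrian-crossing parameters (assumed buckets).
PARAMS = {
    "height_m": [1.0, 1.4, 1.7, 1.9],
    "clothing": ["dark", "light", "reflective", "patterned", "hi-vis"],
    "distance_m": [20, 40, 60, 80, 100, 150],
    "walk_speed_mps": [0.8, 1.2, 1.6, 3.0],
    "direction": ["left_to_right", "right_to_left"],
    "weather": ["clear", "rain", "heavy_rain", "fog", "snow"],
    "occlusion": ["none", "parked_truck", "vegetation"],
}

def variant_count(params: dict) -> int:
    """Number of distinct combinations of the bucketed parameters."""
    return math.prod(len(values) for values in params.values())

def random_variant(rng: random.Random, params: dict, locations: list) -> dict:
    """Pick one value per parameter plus a place on the map."""
    variant = {name: rng.choice(values) for name, values in params.items()}
    variant["location"] = rng.choice(locations)
    return variant
```

Here `variant_count(PARAMS)` is 4 × 5 × 6 × 4 × 2 × 5 × 3 = 14,400; spread over 50 hypothetical map locations that is 720,000 variants, before mixing with any other scenario.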

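Even if the accuracy of sensor simulation is suspect, you can still use “sensor bypass” (feeding the simulator’s ground-truth object data directly to the downstream software instead of simulated sensor output) to test many other variations.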
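A minimal Python sketch of the idea, assuming a toy stack in which a planner consumes a list of tracked objects. `TrackedObject`, `plan`, `step` and the sensor/perception callables are hypothetical names for illustration; a real stack’s interfaces will differ. With bypass on, the planner sees ground truth, so its logic can be exercised even when sensor models are not trusted.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class TrackedObject:
    kind: str
    x: float  # metres ahead of the EGO
    y: float  # lateral offset from the EGO's lane centre, metres

def plan(objects: List[TrackedObject], brake_within_m: float = 30.0) -> str:
    """Toy planner: brake if any object is in the EGO's lane and closer than brake_within_m."""
    for obj in objects:
        if abs(obj.y) < 1.5 and 0.0 < obj.x < brake_within_m:
            return "brake"
    return "cruise"

def step(ground_truth: List[TrackedObject],
         sensor_bypass: bool = True,
         render_sensors: Optional[Callable] = None,
         perceive: Optional[Callable] = None) -> str:
    if sensor_bypass:
        # Sensor bypass: hand the simulator's ground-truth objects straight to the planner,
        # so sensor-model fidelity cannot affect the result.
        objects = ground_truth
    else:
        # Full path: simulated sensor data -> perception -> planner.
        objects = perceive(render_sensors(ground_truth))
    return plan(objects)
```

For example, `step([TrackedObject("pedestrian", 20.0, 0.5)])` returns `"brake"`, independent of how well any sensor model would have rendered that pedestrian.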

Flexibility

Physical tests are an invaluable resource for accurately capturing the input from the sensors, but simulations are far more flexible. In recorded drives, it is possible to manipulate some factors, such as weather and lighting conditions, or to add actors (as long as they do not really interact with the scenario), but it is difficult to change the responses of either the EGO or the other actors. If the EGO’s behavior or trajectory changes due to changes in the algorithm, the other actors still react as they did in the recording, making the rest of the simulation irrelevant. So if the algorithm changes and the EGO modifies its behavior, recorded tests cannot check that the new behavior results in a safe maneuver. In model-based scenario generation, each change can be regressed thoroughly, and the actors respond to changes in the EGO’s behavior, helping to prove that the changes have corrected the problem without introducing new ones.
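The replay problem can be illustrated with a one-dimensional toy in Python. A follower is “recorded” driving at a constant 20 m/s, 30 m behind an EGO that never brakes. When a new algorithm makes the EGO brake, the replayed follower drives straight through it, while a simple reactive follower keeps its distance. The speeds, distances and the gap-keeping rule are illustrative assumptions, not a real actor model.

```python
DT = 0.1     # simulation step, s
STEPS = 100  # 10 s of simulated time
V0 = 20.0    # initial speed of both vehicles, m/s

def ego_trajectory(brake_step=None, decel=4.0):
    """EGO starts 30 m ahead of the follower; optionally brakes from brake_step onward."""
    x, v, xs, vs = 30.0, V0, [], []
    for i in range(STEPS):
        if brake_step is not None and i >= brake_step:
            v = max(0.0, v - decel * DT)
        x += v * DT
        xs.append(x)
        vs.append(v)
    return xs, vs

def record_follower():
    """The 'recording': a follower at constant 20 m/s behind an EGO that never braked."""
    x, xs = 0.0, []
    for _ in range(STEPS):
        x += V0 * DT
        xs.append(x)
    return xs

def reactive_follower(ego_xs, ego_vs, ego_start=30.0, min_gap=10.0, v_max=V0):
    """Toy gap-keeping actor: matches the EGO's speed, corrected toward min_gap."""
    x, prev_ego, xs = 0.0, ego_start, []
    for ego_x, ego_v in zip(ego_xs, ego_vs):
        gap = prev_ego - x
        v = min(v_max, max(0.0, ego_v + (gap - min_gap) / 2.0))
        x += v * DT
        xs.append(x)
        prev_ego = ego_x
    return xs

def min_gap(ego_xs, follower_xs):
    """Smallest distance between EGO and follower; negative means they overlapped."""
    return min(e - f for e, f in zip(ego_xs, follower_xs))
```

With `ego_xs, ego_vs = ego_trajectory(brake_step=20)`, `min_gap(ego_xs, record_follower())` is negative (the replayed actor “passes through” the EGO), whereas `min_gap(ego_xs, reactive_follower(ego_xs, ego_vs))` stays at or above 10 m. Against the original, non-braking EGO the replay is fine, with a constant 30 m gap; it only breaks once the EGO’s behavior changes.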

Unknowns & Rare events

There are two problems with deeming an event too rare to test or to solve. The first is that events may look rare because the root cause of the problem has been misidentified. One may conclude that a specific type of accident happens only once every 10^9 miles and is rare enough not to require a fix or focused testing, while the real source of the issue is something that happens once every 10^6 miles and must be fixed. With model-based scenario generation, you will inevitably find many instances of the problem quickly and be able to identify the true underlying root cause before it causes accidents. Moreover, because constrained random combinations cover far more interesting events per mile, simulations will uncover risk areas that were previously unknown. The second problem is inexcusable accidents. A baby falling from a car in an intersection may be a once-in-10^10-miles event, but an algorithm that was not sufficiently sensitive to this extremely rare case would be unforgivable.

In model-based simulation, creating specific scenarios such as the one above can be streamlined, and by leveraging cloud or on-premises compute farms, a project can run millions of scenarios.
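The rates in the root-cause example translate into road mileage as follows. This Python sketch assumes events arrive as a Poisson process with a constant per-mile rate (a simplification), and asks how many miles a fleet must drive to see an event even once with a given probability.

```python
import math

def miles_to_observe(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Road miles needed to see at least one event with probability `confidence`.

    Assumes a Poisson process with constant rate, so
    P(at least one event in N miles) = 1 - exp(-rate * N); solve for N.
    """
    return -math.log(1.0 - confidence) / rate_per_mile

for rate in (1e-6, 1e-9):
    print(f"rate {rate:g}/mile -> {miles_to_observe(rate):,.0f} miles")
```

At 10^-6 per mile, roughly 3 million road miles are needed to observe the event once with 95% probability; at 10^-9, roughly 3 billion. Each instance found in the field is that expensive, which is why generating many instances in simulation makes root-cause analysis practical.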

Efficiency

An active AV development project requires a massive regression suite that can be run every time the software or algorithm changes, whether for a bug fix or a new feature. The regression needs to complete quickly to give developers immediate feedback, enabling modern development practices such as Continuous Integration. It requires an abundance of scenarios and variations to confirm that the system continues to meet the safety requirements. For example, if there was a correction to a specific scenario of merging onto a highway in heavy snow, the test suite may have only a limited number of such scenarios recorded in the database, and changing how the actors drive is beyond the capability of recording manipulation. Testing the fix thoroughly and releasing the new software requires orders of magnitude more variation. If the project relies solely on recordings, it would need to send the fleet to harvest this situation, and after a month of focused driving, the project would be lucky to have a fraction of the scenarios required. Corner cases of this scenario (such as the same merge at higher speeds) would take far more time to procure. Model-based test generation allows the project to get the full suite of target tests with minimal effort. Moreover, the random simulations can use these tests to pack ‘drama’ into many of the simulations, resulting in a far more efficient and thorough proof of the safety case.
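A CI regression gate over generated scenarios might look roughly like the Python sketch below. Everything in it is a hypothetical stand-in: a “stack” reduced to a reaction time and braking rate, a stopped-obstacle scenario, and a minimum-clearance KPI. Seeds make every failure reproducible, and a thread pool stands in for the process- or machine-level distribution a real compute farm would use.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

@dataclass(frozen=True)
class Stack:
    """Hypothetical software version under test, reduced to reaction time and braking."""
    reaction_s: float
    decel_mps2: float = 6.0

def stopping_distance(v: float, stack: Stack) -> float:
    # Reaction distance plus braking distance v^2 / (2a).
    return v * stack.reaction_s + v * v / (2 * stack.decel_mps2)

BASELINE = Stack(reaction_s=1.0)

def generate(seed: int):
    """Seeded scenario: EGO speed and distance to a stopped obstacle."""
    rng = random.Random(seed)
    v = rng.uniform(10.0, 30.0)
    # Constraint: place the obstacle so the baseline stack stops with >= 3 m to spare.
    d = stopping_distance(v, BASELINE) + 3.0 + rng.uniform(0.0, 40.0)
    return v, d

def run(seed: int, stack: Stack, min_clearance: float = 2.0):
    """One simulated run: check the KPI 'clearance after stopping >= min_clearance'."""
    v, d = generate(seed)
    clearance = d - stopping_distance(v, stack)
    return seed, clearance >= min_clearance

def regression(stack: Stack, seeds, workers: int = 8):
    """Return failing seeds; an empty list means the change passes the gate."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda s: run(s, stack), seeds)
        return [seed for seed, ok in results if not ok]
```

Usage: `regression(Stack(reaction_s=1.0), range(200))` returns `[]`, while a change that slows reaction to 2.0 s produces a non-empty list of seeds, each of which can be replayed exactly to debug the failure.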

Cost

This is simple. Driving real vehicles is costly. Simulation is cheap. With model-based scenario generation, you get more for less.

The cost of a single physical test vehicle, including hardware and personnel (drivers, maintenance team, data analysis), is estimated at hundreds of thousands of US dollars. In simulation, by contrast, each processor core can run a “virtual vehicle”. The cost of a virtual vehicle is at least an order of magnitude lower than that of a physical one.

Beyond the raw cost, one needs to look at scenario density: how many interesting scenario variations are encountered per day. A physical test vehicle will typically encounter 10–100 interesting scenarios per day, while a virtual test vehicle running an intelligent model-based simulation can easily encounter 1000–10000, a 100X advantage. Combined with a per-vehicle cost that is at least an order of magnitude lower, this means that, measured in meaningful scenarios per dollar, model-based virtual testing is at least 1000X cheaper than physical testing. Again, this does not mean that one should abandon physical testing. But it does mean that the testing budget is better spent applying each technique where it is most effective.
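The 1000X figure is the product of the two ratios above. A small Python check using only the article’s own estimates, with costs expressed in relative units (physical = 10, virtual = 1, i.e. the “at least an order of magnitude” lower bound) rather than real dollar amounts:

```python
def scenarios_per_cost_unit(scenarios_per_day: float, cost_per_day: float) -> float:
    return scenarios_per_day / cost_per_day

def virtual_advantage(phys_scenarios: float, virt_scenarios: float,
                      phys_cost: float = 10.0, virt_cost: float = 1.0) -> float:
    """Ratio of meaningful scenarios per cost unit, virtual vs. physical."""
    return (scenarios_per_cost_unit(virt_scenarios, virt_cost)
            / scenarios_per_cost_unit(phys_scenarios, phys_cost))

# Low and high ends of the quoted daily scenario ranges.
print(virtual_advantage(10, 1000))    # 1000.0
print(virtual_advantage(100, 10000))  # 1000.0
```

At either end of the quoted ranges, the 100X density advantage times the 10X cost advantage gives 1000X; a larger cost gap would only widen it.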

Summary

Model-based Automated Virtual Testing is not a panacea. My company, Foretellix, has created a complete solution that enables developers to do Intelligent Automated testing with both virtual and physical execution platforms. The solution consists of an open language called M-SDL (Measurable Scenario Description Language) that facilitates the capture of abstract scenarios (the model). We provide Foretify, a software tool that takes these abstract M-SDL scenarios, automatically generates a massive number of random valid scenario variants and collects scenario coverage to efficiently prove the safety case for both autonomous vehicles and advanced ADAS systems.

Physical testing of AVs is a must, but it’s only part of the solution. Automated Virtual Testing using constraint-driven random methods in the verification program allows us to fill in the gaps from physical and recorded driving. At a time when physical testing is halted, it’s a good opportunity for developers to take a step back and consider a cheaper, faster, safer and more comprehensive approach to both raise quality and shorten time to ADAS and AV development convergence.